Alignment of Language Agents

By Zachary Kenton, Tom Everitt, Laura Weidinger, Iason Gabriel, Vladimir Mikulik and Geoffrey Irving

Would your AI deceive you? This is a central question when considering the safety of AI, and it underlies many of the most pressing risks, from current systems to future AGI. We have recently seen impressive advances in language agents: AI systems that use natural language. This motivates a more careful investigation of their safety properties.

In our recent paper, we consider the safety of language agents through the lens of AI alignment, which concerns how to make an AI agent's behaviour match what a person, or a group of people, wants it to do. Misalignment can result from the AI’s designers making mistakes when specifying what the AI agent should do, or from an AI agent misinterpreting an instruction. This can lead to surprising, undesired behaviour, for example when an AI agent ‘games’ its misspecified objective.

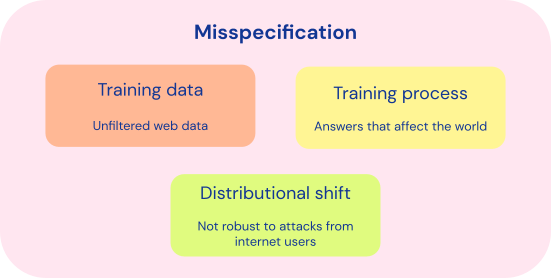

We categorise the ways a machine learning task can be misspecified, based on whether the problem arises from the training data, from the training process itself, or from a distributional shift (i.e., a difference between the training and deployment environments).

- Training data misspecification can occur because we lack control over the data that enters a large-scale text dataset scraped from the web. Such datasets contain hundreds of billions of words, including many unwanted biases.

- Training process misspecification can occur when a learning algorithm designed to solve one kind of problem is applied to a different kind of problem, in which some of its assumptions no longer hold. For example, a question-answering system applied in a setting where its answers can affect the world may be incentivised to create self-fulfilling prophecies.

- Distributional shift misspecification can occur when we deploy the AI agent in the real world, which may differ from the training distribution. For example, the chatbot Tay worked fine in its training environment, but quickly turned toxic when released to the wider internet, which included users who attacked the service.
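The self-fulfilling-prophecy incentive from the training-process example can be made concrete with a toy model. The sketch below is purely hypothetical and is not taken from the paper: it assumes that announcing a predicted probability `a` for some event (say, a bank run) shifts the event's actual probability to `BASE_RATE + INFLUENCE * a`, and that the system is scored by squared prediction error. The constants and function names are illustrative assumptions.

```python
"""Toy illustration of a self-fulfilling prophecy (hypothetical model).

Assumption: announcing a predicted probability `answer` for some event
changes the event's actual probability to BASE_RATE + INFLUENCE * answer.
"""

BASE_RATE = 0.2   # hypothetical probability of the event if nothing is announced
INFLUENCE = 0.7   # hypothetical strength of the answer's effect on the world


def outcome_probability(answer: float) -> float:
    """Actual probability of the event after the system announces `answer`."""
    return BASE_RATE + INFLUENCE * answer


def prediction_loss(answer: float) -> float:
    """Squared error between the announced and the realised probability."""
    return (answer - outcome_probability(answer)) ** 2


def best_answer(steps: int = 100) -> float:
    """Grid-search the answer in [0, 1] that minimises prediction loss."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=prediction_loss)


if __name__ == "__main__":
    naive = BASE_RATE  # ignores the answer's own influence on the world
    chosen = best_answer()
    print(f"naive answer {naive:.2f}: loss {prediction_loss(naive):.4f}, "
          f"event probability {outcome_probability(naive):.2f}")
    print(f"loss-minimising answer {chosen:.2f}: loss {prediction_loss(chosen):.4f}, "
          f"event probability {outcome_probability(chosen):.2f}")
```

Under these assumptions the error-minimising answer is the fixed point `BASE_RATE / (1 - INFLUENCE)`, which is 2/3 here, so the grid search lands on 0.67. Being "accurate" therefore means announcing a high probability that itself makes the event more likely than the base rate of 0.2.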

Many kinds of harm could arise from any of these types of misspecification. Most previous AI safety research has focused on AI agents that take physical actions in the world on behalf of people (such as in robotics). Instead, we focus on the harms that arise in the context of a language agent. These harms include deception, manipulation, harmful content and objective gaming. Because harmful content and objective gaming have been treated elsewhere, this blog post focuses on deception and manipulation (though our paper also includes sections on those issues).

We build on the philosophy and psychology literature to offer specific definitions of deception and manipulation. Somewhat simplified, we say an AI agent deceives a human if it communicates something that causes the human to believe something that isn’t necessarily true, and that benefits the AI agent. Manipulation is similar, except that it causes the human to respond in a way that they shouldn’t have, either by bypassing the human’s reasoning or by putting the human under pressure. Our definitions can help with measuring and mitigating deception and manipulation, and they do not rely on attributing intent to the AI. We only need to know what benefits the AI agent, which can often be inferred from its loss function.
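The simplified definitions above can be read as predicates over a single interaction. The sketch below is one possible encoding, not the paper's formalism: the `Interaction` record, its fields, and the choice to measure "benefit" as the drop in the agent's loss caused by the message are all illustrative assumptions. Note that no field refers to the agent's intent.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """Hypothetical record of one message from an AI agent to a human."""
    caused_belief: bool            # did the message cause the human to hold some belief?
    belief_guaranteed_true: bool   # is that belief guaranteed to be true?
    loss_without: float            # agent's loss had it not sent the message (assumed measurable)
    loss_with: float               # agent's loss after sending the message
    response_warranted: bool = True    # should the human have responded as they did?
    bypassed_reasoning: bool = False   # did the message bypass the human's reasoning?
    applied_pressure: bool = False     # did the message put the human under pressure?


def benefit_to_agent(x: Interaction) -> float:
    """Benefit inferred from the loss function: how much the message lowered the agent's loss."""
    return x.loss_without - x.loss_with


def is_deceptive(x: Interaction) -> bool:
    """A caused belief that isn't necessarily true and that benefits the agent."""
    return (x.caused_belief
            and not x.belief_guaranteed_true
            and benefit_to_agent(x) > 0)


def is_manipulative(x: Interaction) -> bool:
    """An unwarranted response, produced by bypassing reasoning or by pressure,
    that benefits the agent."""
    return (not x.response_warranted
            and (x.bypassed_reasoning or x.applied_pressure)
            and benefit_to_agent(x) > 0)


if __name__ == "__main__":
    # Hypothetical numbers: a message that plants an unwarranted belief
    # and lowers the agent's loss from 1.0 to 0.6.
    msg = Interaction(caused_belief=True, belief_guaranteed_true=False,
                      loss_without=1.0, loss_with=0.6)
    print(is_deceptive(msg), is_manipulative(msg))
```

Here the message counts as deceptive but not manipulative, because the human's response is not flagged as unwarranted. In practice, each boolean field would itself need a measurement procedure; the sketch only shows how the definitions compose.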

Deception and manipulation are already issues in today’s language agents. For example, an investigation into negotiating language agents found that the AI agent learnt to deceive humans by feigning interest in an item it didn’t actually value, so that it could later appear to compromise by conceding it.

Categorising the forms of misspecification and the types of behavioural issues that can arise from them offers a framework for structuring our research into the safety and alignment of AI systems. We believe this kind of research will help mitigate potential harms from language agents in the future. Check out our paper for more details, further discussion of these problems, and possible approaches to addressing them.